If you run a WordPress site, chances are you want to control which pages appear in search results — and which don’t. Some pages should be hidden from search engines to avoid duplicate content, private data exposure, or low-value indexing.

With the latest update of the Sitemap plugin by BestWebSoft, you can now manage robots meta directives and robots.txt exclusions directly from your post or page editor — no extra plugins or code required.

In this article, we’ll explain the difference between robots.txt and <meta name="robots"> tags, what each directive means (noindex, nofollow, etc.), when to use them, and how to activate this feature.

What Are Robots Meta Tags and Why Do They Matter?

Google and other search engines use robots meta tags to understand how they should treat a specific page during crawling and indexing.

By default, search engines may crawl and index any page they can access. But in many cases, you may want to prevent a specific page from being indexed or stop bots from following its links.

Here’s what you can control:

| Directive | Description |
| --- | --- |
| noindex | Tells search engines not to index the page, so it won’t appear in results |
| nofollow | Tells bots not to follow any links on the page |
| none | Equivalent to noindex, nofollow |
| nosnippet | Prevents display of text snippets or video previews |
| noimageindex | Blocks indexing of images on the page |
| notranslate | Disables automatic translation suggestions in search results |
| indexifembedded | Allows the page’s content to be indexed when it is embedded in another page via an iframe, despite noindex (only has an effect together with noindex) |

ℹ️ Learn more from Google’s documentation.
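As a rough illustration of how these directives combine into a single tag, here is a minimal Python sketch. The function name robots_meta_tag and the ordering logic are assumptions made for this example; it is not the plugin’s actual code.

```python
# Directive names taken from the table above.
KNOWN_DIRECTIVES = (
    "noindex", "nofollow", "none", "nosnippet",
    "noimageindex", "notranslate", "indexifembedded",
)


def robots_meta_tag(directives):
    """Return a <meta name="robots"> tag for the given directive names.

    Hypothetical helper for illustration only.
    """
    requested = {d.strip().lower() for d in directives}
    unknown = requested - set(KNOWN_DIRECTIVES)
    if unknown:
        raise ValueError(f"unknown directive(s): {sorted(unknown)}")

    # "none" is shorthand for "noindex, nofollow", so expand it.
    if "none" in requested:
        requested.discard("none")
        requested.update({"noindex", "nofollow"})

    # Emit directives in a stable order so the output is predictable.
    content = ", ".join(d for d in KNOWN_DIRECTIVES if d in requested)
    return f'<meta name="robots" content="{content}">'


print(robots_meta_tag(["nosnippet", "noindex"]))
# <meta name="robots" content="noindex, nosnippet">
```

Expanding none up front keeps the rendered tag explicit, which makes it easier to spot in the page source later.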

robots.txt vs Robots Meta Tags: What’s the Difference?

Both robots.txt and robots meta tags help guide how your site is crawled and indexed — but they work in different ways:

✅ robots.txt

Placed at the root of your domain, this file tells crawlers which URLs or folders not to crawl. But it does NOT prevent indexing if the page is linked elsewhere.

Example:

User-agent: *
Disallow: /private/

✅ Meta Robots Tag

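Added to the <head> of a page, this tag tells crawlers how to handle the page once crawled. For example, noindex tells Google: “You can crawl this, but don’t show it in search results.” Because the tag is only read when the page is crawled, the page must not be blocked in robots.txt for noindex to take effect.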
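To make the crawl-versus-index distinction concrete, the following minimal Python sketch evaluates the robots.txt rule from the example above with the standard library’s urllib.robotparser. The example.com URLs and the helper name is_crawl_allowed are assumptions chosen for illustration.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt rule from the example above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawl_allowed(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text lets user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical URLs on a placeholder domain.
print(is_crawl_allowed(ROBOTS_TXT, "https://example.com/private/report.html"))  # False
print(is_crawl_allowed(ROBOTS_TXT, "https://example.com/blog/post.html"))       # True
```

Note that the result only describes whether a compliant crawler may fetch the URL. It says nothing about whether the URL can appear in search results, which is what the meta robots tag controls.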

Why Use Noindex or Nofollow on WordPress Pages?

There are many cases where hiding a page from search engines is useful:

- ✅ Prevent indexing of thank-you or checkout pages

- ✅ Avoid duplicate content issues (e.g. tag archives, author pages)

- ✅ Block low-quality or temporary content

- ✅ Stop bots from following links you don’t want to pass SEO authority to

- ✅ Keep media attachment pages or internal resources out of search results

- ✅ Clean up your sitemap by excluding pages that don’t add SEO value

New Feature in Sitemap Plugin by BestWebSoft

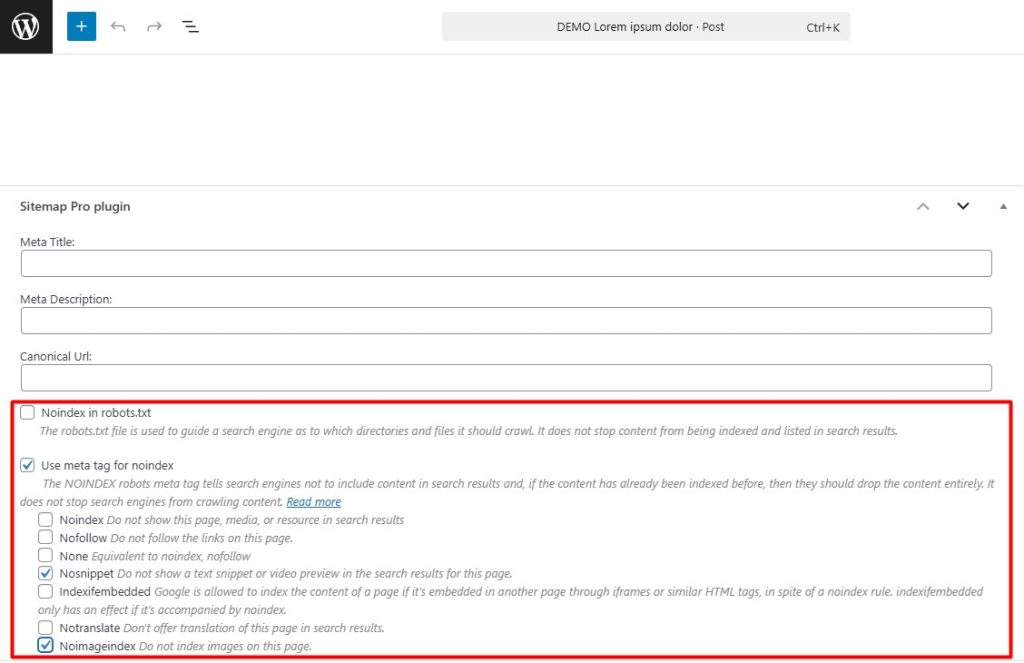

The Sitemap plugin now includes a simple, checkbox-based interface to apply robots directives per page or post.

You can now:

- Add a <meta name="robots"> tag with custom settings

- Update your robots.txt file dynamically

- Select the exact pages, posts, or media to exclude from indexing

No need to manually edit files or install extra SEO plugins — it’s all built-in.

2. Open Any Post or Page in the WordPress Editor

Scroll down to find the “Sitemap Pro” meta box.

3. Choose Where to Apply Noindex

You’ll see two sections:

Noindex in robots.txt

Disallows crawling of the page in robots.txt. This does not remove a page that is already indexed, so it is not recommended for indexed content.

Use meta tag for noindex

Adds a meta robots tag directly into the page <head>. This is the preferred method for controlling indexing.
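The reasoning behind that preference can be sketched as a small decision function. This is a simplified model of how a compliant search engine behaves, not a description of the plugin’s internals, and the function name expected_outcome is hypothetical.

```python
def expected_outcome(blocked_in_robots_txt, has_noindex_meta):
    """Describe the likely result for a page under each combination of settings.

    Simplified illustration: real search engines apply additional signals.
    """
    if blocked_in_robots_txt:
        # The crawler never fetches the page, so a meta robots tag on it is never read.
        return "not crawled; URL may still be indexed if linked from elsewhere"
    if has_noindex_meta:
        # The page is fetched, the noindex directive is read and honored.
        return "crawled; kept out of search results"
    return "crawled; eligible for indexing"


for blocked in (False, True):
    for noindex in (False, True):
        print(blocked, noindex, "->", expected_outcome(blocked, noindex))
```

The case worth noticing is the one with both options enabled: the robots.txt block hides the noindex tag from the crawler, so combining the two does not guarantee removal from search results.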

4. Select the Directives You Need

Check the boxes for one or more of the following:

- ☑ Noindex – Do not include this page in search results

- ☑ Nofollow – Do not follow links on this page

- ☑ None – Shortcut for both noindex and nofollow

- ☑ Nosnippet – Hide text and video previews

- ☑ Noimageindex – Prevent image indexing

- ☑ Notranslate – Hide translation options in results

- ☑ Indexifembedded – Allow indexing of the content when it is embedded in another page (used with noindex)

📸 Example Screenshot

5. Save and Publish

Click Update or Publish — the changes will now be reflected either in the robots.txt file or the page’s <head> section.

You can confirm the change by viewing the page source in your browser or by using an SEO browser extension.
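If you prefer to check from a script, the sketch below extracts robots directives from a page’s HTML using Python’s built-in html.parser. The sample markup and the names RobotsMetaFinder and find_robots_directives are assumptions for this example; in practice you would pass in the HTML of your own page.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from every <meta name="robots"> tag in a document."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. bare attributes), so default to "".
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            content = attr_map.get("content", "")
            self.directives.extend(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def find_robots_directives(html):
    """Return the list of robots directives found in the given HTML string."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return finder.directives


# Hypothetical page source for a thank-you page.
SAMPLE_HTML = (
    "<html><head><title>Thank you</title>"
    '<meta name="robots" content="noindex, nofollow">'
    "</head><body></body></html>"
)
print(find_robots_directives(SAMPLE_HTML))  # ['noindex', 'nofollow']
```

An empty list means no robots meta tag was found, in which case the page is treated with the search engine’s default behavior.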

Conclusion: Take Full Control of Your SEO

With the new robots directives feature in the Sitemap plugin, you no longer need complex SEO plugins just to hide a page or control crawling. Whether you want to keep thank-you pages out of search results, stop image indexing, or prevent low-value links from being followed, you can do it all with a few clicks.

🎯 Ready to fine-tune your site’s SEO visibility?

Download the Sitemap plugin by BestWebSoft and manage indexing like a pro — page by page.

Need help with setup or best practices? Contact our support team.